An RTS panel examines the improvements and challenges that machine learning can bring to news organisations.

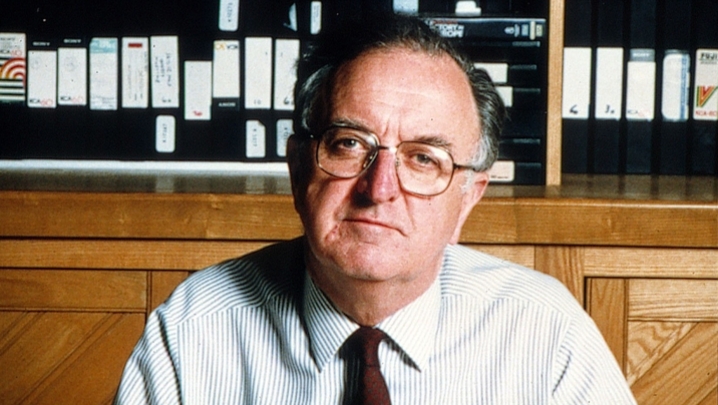

If you thought that AI is not yet having an impact on news organisations, think again. As panellist and data journalism pioneer Gary Rogers reminded this absorbing RTS discussion, “AI: the new frontier for journalism”, the Press Association’s Radar service, which he set up, has been using machine learning to create news stories for the past five years. Radar says it uses local data to generate around 150,000 stories a year for clients across the UK.

“There are very good, well-trusted tools out there,” said Rogers. “You can use AI tools to enable journalists to do jobs they can’t do by human effort alone.” It was helpful to regard AI as “an assistant, not a replacement for journalism”.

He added: “There is an opportunity for news companies here. If it becomes more difficult to discern what’s true and what’s not, then people need to turn to trusted brands and people they can rely on. They will turn to big broadcasters and big news brands, which will further your reputation and trust and win some things back.”

One of those big news brands was represented by Tami Hoffman, ITN’s Director of News Distribution and Commercial Innovation. She was more sceptical of the benefits that AI – in particular, generative AI – could provide for broadcast journalists and for ITN as a commercial organisation.

Her boss, ITN CEO Rachel Corp, recently urged the Government to take a “proactive stance” to protect journalism from the impact of AI in the run-up to national elections due to be held in the UK and US next year.

Reassuringly, the CEO said at the time that “AI will never be able to replicate the work of journalists who gather information and contextualise it by building relationships and being eyewitnesses to events as they occur”. But AI could take some of the grunt work out of journalism.

‘It’s frightening that products of this kind are being unleashed without the checks that we expect’

Let’s hope she’s right but, as this discussion made clear, there is concern about the impact AI is likely to have on broadcast news, most of which prides itself on being accurate and impartial.

Hoffman was worried on several counts, starting with the fear that AI might infringe ITN’s copyrights. She wanted strict rules on licensing ITN content for use with AI (ITN’s news archive goes back to 1955) and for the Government to get its act together on regulating AI.

She warned: “More safety checks are involved in creating a toilet cleaner or a lipstick than unleashing a generative AI model on the public and our children.… It’s frightening that products of this kind are being unleashed without the checks we expect. Government [routinely] asks companies to ensure their products are safe; it has been very remiss in that this is being launched without any safety checks at all.”

Hoffman said that journalists needed to be more sceptical than ever, and staff needed educating on AI. She reminded the RTS that “we still don’t have legislation to control social media and we’re 20 years on from that. The Digital Media Bill is still going through Parliament. In Denmark, they’re introducing a cultural tax on streamers.”

She suggested that a levy be introduced on companies that benefit from data scraping, the process whereby machines extract huge amounts of text and images from online sources.

Hoffman used the example of people seeking information on the failed Russian mutiny on 24 June to illustrate how the public and news organisations such as ITN could lose out from this practice.

“If you want to read about what happened in Russia that weekend, ask Google and you will get a selection of different stories from different sites, which you can then go to, and the traffic registers on those sites,” she explained. “But put it into Bard or ChatGPT and they will scrape together various stories from the internet and provide a three-line summary.

“If that’s all you want, you might not go further. You won’t know where that three-line summary has been pulled from, or what biases it has or the political weighting.”

So much for having an informed democracy. Data scraping could also have a detrimental impact on the financial stability of ITN and its peers, noted Hoffman, who emphasised: “If machines are being taught using our material, we want a licence [payment] for that in the way we would expect a licence for anything.”

Rogers explained that successful deployment of any AI technology was completely dependent on humans: “Unless your people know what they are doing, it’s never going to work. You can’t rely on the machine. If you put rubbish in, you’ll get rubbish out.

“Data needs to be clean and correct, otherwise we’re not going to be able to do anything with it. You can’t rely on the machine, you have to check everything. At some point, anything you’re planning to put in front of an audience needs to go through a human filter. Think of it as a colleague who can occasionally be unreliable. Checks and balances need to be in place and be designed into your system. You can’t just hope that the machine will get things right.”

So, could regulators help? Ali-Abbas Ali, Director of Broadcasting Competition at Ofcom, said that, “in the first instance, the onus is on the newsroom. If you hold a broadcast licence, you have an ongoing responsibility to comply with the Broadcasting Code. We expect you to protect audiences from harm and to maintain high levels of trust in broadcast news.”

He continued: “We’re looking for broadcasters to unleash the creativity and efficiency of AI but to do it responsibly. That means investing in the people who understand how the tools work and ensuring you have bulletproof contracts in place with suppliers.”

That may prove easier said than done. Ali said audiences needed media-literacy education so they could develop the critical faculties to recognise when something was fake.

Grown-ups were more likely to believe in conspiracy theories than teenagers if the experience of his family was anything to go by: “The younger generation are developing that critical understanding. I get more fake memes from my uncle than I do from my daughter.”

Then there are the biases inherent in AI searches and their impact on the way minorities are depicted in the results. Ali said ethnic-minority groups know they will be under-represented, given the stock of text and images being used to train AI. That material will also contain racially stereotyped tropes and “be rife with homophobia”.

He added: “The Equality Act still applies when you are using AI. When you use these tools, you’ve got to understand what your responsibilities are under the law.

“You are not relieved of those responsibilities when you use a tool that you don’t understand properly. The investment everybody needs to make in their ability to understand the tools is significant. I think that will be a challenge because [of the very small] number of people who understand the tools.… There are not that many people like Gary Rogers out there.”

Report by Steve Clarke. ‘AI: the new frontier for journalism’ was an RTS National Event held on 29 June. It was chaired by Symeon Brown, Channel 4 News correspondent and host of AI Watch. The producers were Ashling O’Connor and Lisa Campbell.